Why limit the size of your files?

According to Claris, the maximum size of a Claris FileMaker Pro file is “limited only by disk space to a maximum of 8 TB (terabytes) on a hard disk and OS API capability.” I promise you that the practical limits you impose on any FileMaker files you manage should be far lower than that!

Why you should care, and what those limits should be, is a question that has been buzzing around our hosting and development teams for years, usually prompted by unpleasant circumstances. Recently, our teams got together to draft best practices and standards for handling large FileMaker files.

This question reminds me of the famous exchange between Ian Malcolm and John Hammond in the movie “Jurassic Park”:

Malcolm: …You stood on the shoulders of geniuses to accomplish something as fast as you could, and before you even knew what you had, you patented it, and packaged it, and slapped it on a plastic lunchbox, and now you wanna sell it.

Hammond: I don’t think you give us our due credit. Our scientists have done things which nobody has ever done before.

Malcolm: Yeah, but your scientists were so preoccupied with whether or not they could, they didn’t start to think if they should.

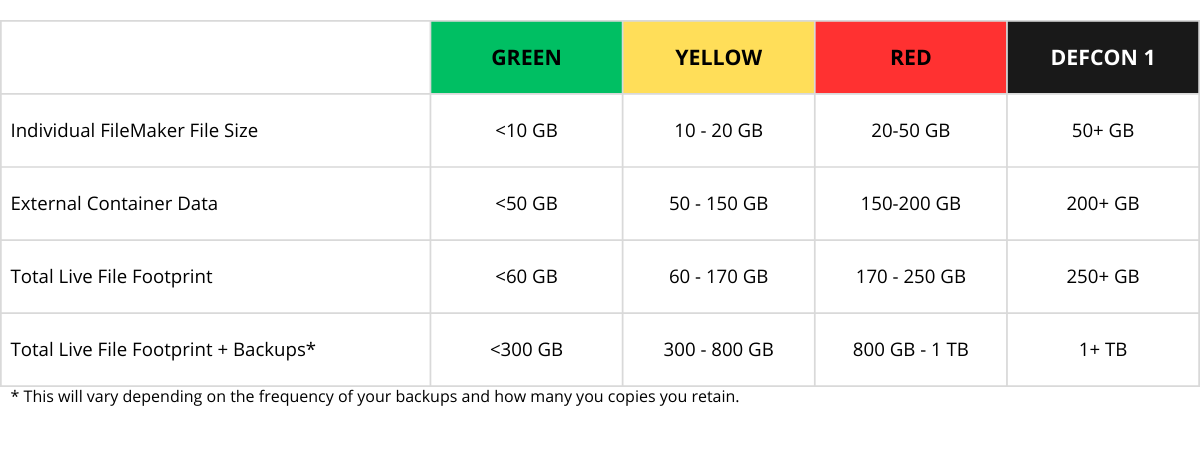

Well, if you aren’t careful, your databases might break free and eat you. Not literally, but we have dealt with enough large FileMaker databases that crashed, needed to be moved, needed a consistency check, or needed to be manipulated in some other way to know how it feels to be chewed up and spat out. In our experience, two things consistently go along with large file sizes:

- Files are more fragile/susceptible to crashing and becoming damaged.

- EVERYTHING you do on the server with large files takes a whole lot longer. Ever had to restart a server “ungracefully” for one reason or another, then wait hours for a consistency check to complete? If the validation process is turned on, your backups take even longer. With large files, a simple restart to recover from a server hiccup can bring your operations to a halt. And have you ever started a backup only to find it still running 8, 10, 12, or even 16 hours later?

To keep files from crashing or taking a long time to load, follow these best practices. First, make sure you are running FileMaker Server on solid-state drives (SSDs), which are now widely available. I am not going to go into specialized hardware configurations, since this post is meant for the general-purpose database use you see in nearly all FileMaker deployments.

If you keep an eye on how much disk space is being consumed and trim the data regularly, you won’t need a specialized configuration.
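Keeping an eye on disk usage can be as simple as a scheduled script. The sketch below is one possible approach, not a Productive Computing tool: it assumes your hosted `.fmp12` files (and any external container folders) sit under a single directory you pass on the command line, and the 10 GiB threshold is a purely hypothetical placeholder, since this post does not state a specific limit.

```python
import sys
from pathlib import Path

# Hypothetical threshold for illustration only; pick a limit that suits your deployment.
SIZE_LIMIT_BYTES = 10 * 1024**3  # 10 GiB


def oversized_files(folder, limit=SIZE_LIMIT_BYTES):
    """Return (path, size) pairs for .fmp12 files larger than `limit`, largest first."""
    results = []
    for path in Path(folder).rglob("*.fmp12"):
        size = path.stat().st_size
        if size > limit:
            results.append((path, size))
    return sorted(results, key=lambda item: item[1], reverse=True)


def total_usage(folder):
    """Sum the size of every regular file under `folder`, including external container data."""
    return sum(p.stat().st_size for p in Path(folder).rglob("*") if p.is_file())


if __name__ == "__main__":
    folder = sys.argv[1] if len(sys.argv) > 1 else "."
    print(f"Total usage: {total_usage(folder) / 1024**3:.2f} GiB")
    for path, size in oversized_files(folder):
        print(f"{path}: {size / 1024**3:.2f} GiB (over limit)")
```

You could run something like this from cron or Windows Task Scheduler and compare the numbers over time; a steadily growing total is often the first sign that trimming is overdue.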

Next, look at what data is being uploaded and stored in your FileMaker file. Don’t insert a 7 MB picture or scan when a 250 KB thumbnail will suffice. If you need to address this after the fact, you can use tools like the Monkeybread plug-in to loop through records and resize and/or compress images already stored in your system.
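To see why the thumbnail matters, here is the arithmetic at scale. The 7 MB and 250 KB figures come from the paragraph above; the 10,000-record count is hypothetical, and decimal units (1 MB = 1,000,000 bytes) are assumed.

```python
# Sizes from the example above, in decimal units (1 KB = 1,000 bytes).
FULL_SCAN_BYTES = 7 * 1000**2   # 7 MB picture/scan
THUMBNAIL_BYTES = 250 * 1000    # 250 KB thumbnail
RECORDS = 10_000                # hypothetical number of records, one image each


def container_footprint(bytes_per_image, records):
    """Total container data if every record stores one image of the given size."""
    return bytes_per_image * records


full = container_footprint(FULL_SCAN_BYTES, RECORDS)
thumbs = container_footprint(THUMBNAIL_BYTES, RECORDS)
print(f"Full scans: {full / 1000**3:.1f} GB")    # Full scans: 70.0 GB
print(f"Thumbnails: {thumbs / 1000**3:.1f} GB")  # Thumbnails: 2.5 GB
print(f"Ratio: {full / thumbs:.0f}x")            # Ratio: 28x
```

Container data stored inside the file is part of the file itself, so every extra gigabyte adds to what backups and consistency checks have to process.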

It is also important to consider how data is stored. When images, PDFs, videos, and other files are uploaded to FileMaker, they are stored in container fields. Container data can be stored either internally, inside the FileMaker file, or externally, in a folder on the FileMaker server itself. Storing container data inside the database is certainly the most convenient option, but eventually it usually needs to be moved to external storage.

If, after you do that, your files are still above our recommended limits, it is likely time to store the container files somewhere off the FileMaker server altogether. You can accomplish this by creating a process that uploads the files to other storage and links each database record to its file, so the files live outside your FileMaker file and off your server.

Since our hosting infrastructure is based on Amazon Web Services (AWS), our preferred method for this type of upload process is AWS S3 storage. S3 provides an API for making the connections and transferring the data, and our Cloud Manipulator plug-in can assist you in coding an integration with S3.
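One way to link a record to a file stored off the server is to save the file’s S3 object key in the record instead of the file itself. The sketch below only builds those values and does not perform the upload, which you would do through the S3 API, an AWS SDK, or a plug-in. The `table/record_id/filename` key layout, the bucket name, and the region are hypothetical choices for illustration, not the Cloud Manipulator plug-in’s behavior.

```python
from urllib.parse import quote


def object_key(table, record_id, filename):
    """Build a predictable key such as 'Invoices/1042/scan 01.pdf' for one record's file."""
    safe_name = filename.replace("/", "_")  # keep the filename from adding extra path levels
    return f"{table}/{record_id}/{safe_name}"


def object_url(bucket, region, key):
    """Virtual-hosted-style S3 URL; percent-encodes the key but keeps its slashes."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key, safe='/')}"


# Hypothetical table, record ID, bucket, and region.
key = object_key("Invoices", 1042, "scan 01.pdf")
print(key)  # Invoices/1042/scan 01.pdf
print(object_url("example-filemaker-containers", "us-east-1", key))
# https://example-filemaker-containers.s3.us-east-1.amazonaws.com/Invoices/1042/scan%2001.pdf
```

Storing the key rather than a full URL keeps the record valid even if you later change how files are served. S3 objects are private by default, so in practice you would typically hand users time-limited presigned URLs rather than a plain public link.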

By moving these large files to AWS S3, you take a burden off your FileMaker Server: your FileMaker file and backups run more smoothly, and restarts and consistency checks are quicker.

There are many small adjustments you can make to improve the overall health of your FileMaker file. Putting your file on a restricted diet, by limiting the size of your uploads, the file types you accept, and where the data is stored, will make your system healthier.

For information about our File Size Reduction Services, Cloud Manipulator Plug-in, or our FileMaker Hosting Services, please contact us at sales@productivecomputing.com.

— Keith Larochelle is the author of this blog and the Chief Financial Officer and co-owner of Productive Computing, Inc.

Other products and services Productive Computing, Inc. offers:

- What can PCI do for you? – Overview Video of Productive Computing Services

- Health Assessment – report on the condition of your system and server

- Consulting and Development – services billed by the hour

- Maintenance and Support – services billed monthly

- Packaged Services – flat fee for Health Assessment, Server Installation, etc.

- Plug-ins – tools to integrate with QuickBooks, Outlook, Google, etc.

- Core CRM Pro – customizable and scalable CRM built on FileMaker

- Claris and FileMaker Licensing – discounts on new seats and renewals

- FileMaker and QuickBooks Hosting – options to host your files in the cloud

- Productive Computing University – free and paid online video training courses for beginner to advanced users and developers